Sensational AG is the company I founded together with a colleague back in 2000. Ever since then, we've had a very nice combination of fun, interesting work and a very successful business.

We’re a very small team – just eight programmers, one business guy, a product designer and a bloody excellent project manager. Personally, I would love to keep the team as small and tightly knit as possible, as that brings huge advantages: no internal politics, a lot of freedom for everybody and mind-blowing productivity.

I’m still amazed to see what we manage to do with our small team time and time again and yet still manage to keep the job fun. It’s not just the stuff we do outside of immediate work, like UT2004 matches, Cola Double Blind Tests, Drone Flights directly from the roof of our office, sometimes hosting JSZurich and meetups for the Zurich Clojure User group and much more – it’s also the work itself that we try to make as fun as possible for everybody.

We are looking for a new member to help us with technical support and smaller scale modifications to our main product, though there’s ample opportunity to grow into helping with bigger projects and getting ownership over pieces of our code-base.

Our main product is an e-commerce platform that’s optimized for wholesale customers. We’re not about presenting a small number of products in the most enticing manner; we’re into helping our end users deal with their big orders (up to 400 line items per week) as efficiently and quickly as possible.

Our customers have relatively large amounts of data for us to handle (the largest data set is 2.3 TB in size). I always call our field “medium data”: while it might still fit into memory, it’s definitely too big to deal with in the naïve way. So it’s not quite big data yet, but it’s certainly in interesting spheres.

We’re in the comfortable position that the data entrusted to us is growing at the speed at which we’re able to learn how to deal with it, and so is our architecture. What started as a simple PHP-in-front-of-PostgreSQL deal back in 2004 has by now grown to a cluster of about 40 machines: job queue servers, importer servers, application servers, media servers and event forwarding servers. Because we are hosting our infrastructure for our customers, we can afford to go the extra mile to do things that are technically interesting and exciting.

Speaking of infrastructure, we own the full stack of our product: our web application, its connected micro services, our phone apps, our barcode reading apps, but also our backend infrastructure (which is kept up to date by Puppet).

While our main application is a beast of 300k lines of PHP code, we still strive to use the best tool for each job. Over the last few years, we have grown our infrastructure with tools we have written in Rust, Clojure and JavaScript (via Node.js), and of course our mobile apps are written in their native languages: Swift, and Java with more and more Kotlin.

We try to stay as current as possible even with our core PHP code. We upgraded to PHP 7.4 the day it came out and we’re already running PHP 8.0 beta 3 in our staging and development environments, ready to upgrade the day PHP 8 comes out – those of us who write PHP are already excited about the new features coming in 8.0.

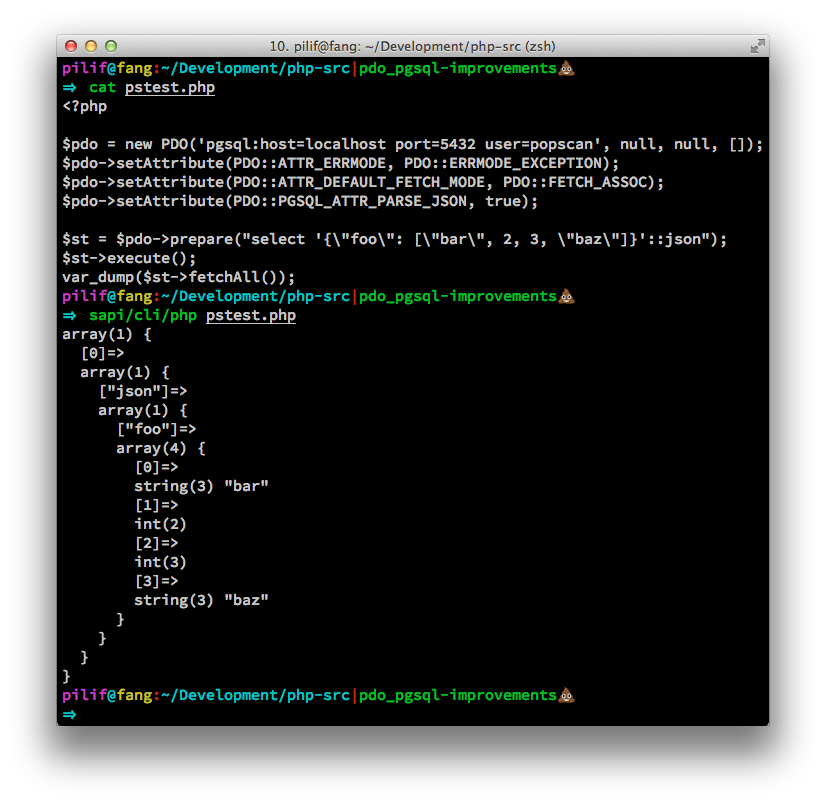

As strong believers in Open Source, whenever we come across a bug in our dependencies, we fix it and publish the fix upstream. Many of our team members have had their patches merged into PHP, Rust, Tantivy and others. Giving back is only fair (and of course also helps us with future maintenance).

If this sounds interesting to you and you want to help us make it possible for our end users to leave their workplace earlier because ordering is so much easier, then ping me at jobs@sensational.ch.

You should be familiar with working on bigger software projects and have an understanding of software maintainability over the years. We hardly ever start fresh, but we constantly strive to keep what we have modern and up to speed with wherever technology goes.

Initially, you will mostly be working on our PHP and JS (ES2020) code-base. But if you’re into another language and it helps you solve a problem you’re having, or if your skill in a language we’re already working with can help us solve a problem, then you’re more than welcome to help.

If you have UNIX shell experience, that’s a big plus. It’s not required, though; without it, you will just have to learn the ropes a bit.

All our work is tracked in git and we’re extremely into beautiful commit histories and thus heavy users of the full feature-set that git offers. But don’t worry – so far, we’ve helped everybody get up to speed.

And finally: As a mostly male team (after all, we only have one woman on our team of developers), we’d especially love it if more women found their way into our team. All of us are very aware of how difficult it is for minorities to find a comfortable working environment that they can add their experiences to and where they can be themselves.